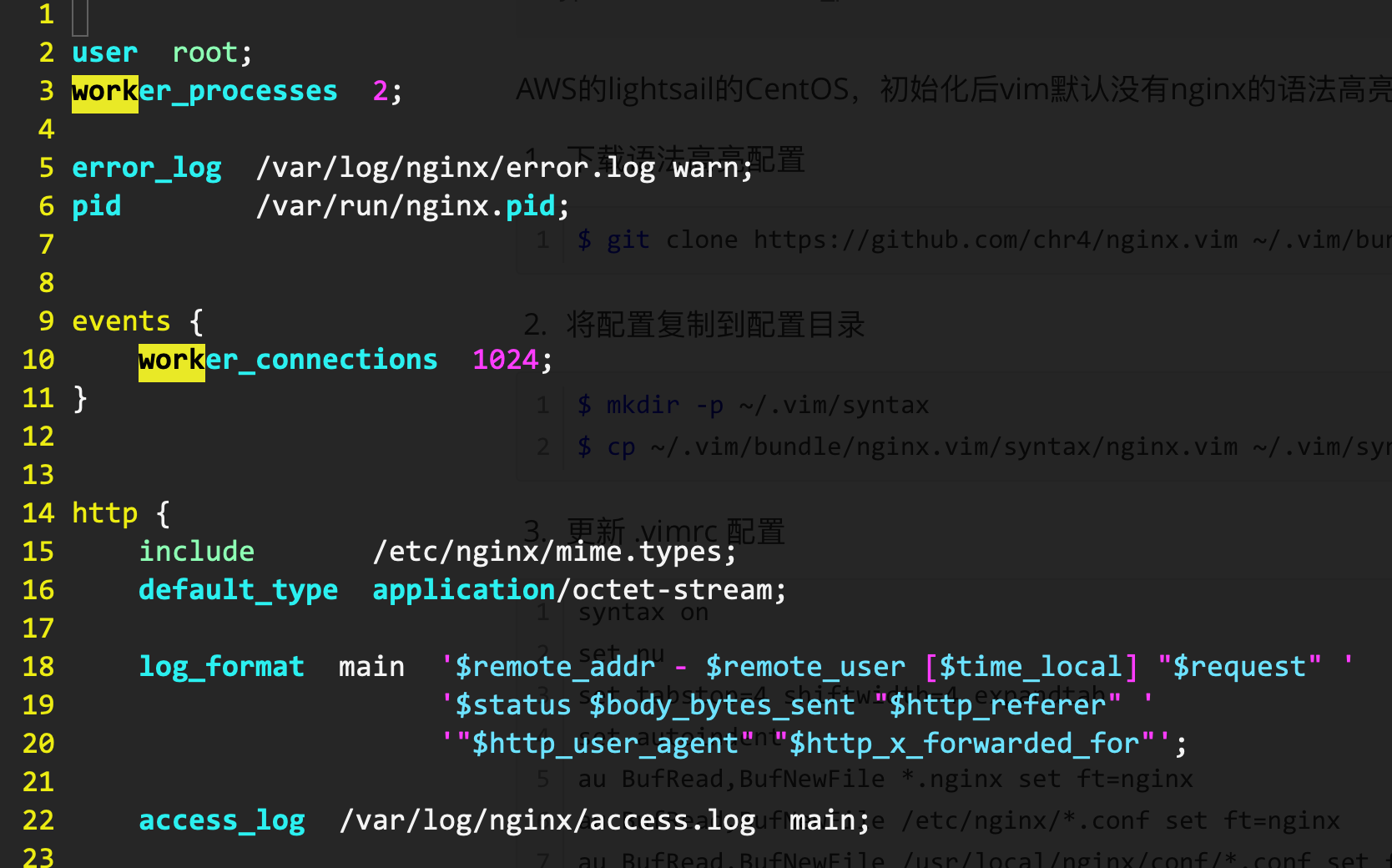

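I kept going back and forth on whether to run nginx inside Docker or directly on the host system.

Running nginx directly on the host has one drawback: managing service restarts after a configuration update is inconvenient. Its performance, however, is undoubtedly the best.

With Docker, the worry is performance.

Only concrete numbers are convincing, and ApacheBench is an ideal tool for getting them.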

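Data comparison

Test: 50,000 requests at a concurrency of 1,000.

Ports: port 6000 is mapped from the Docker container in bridge mode; port 7000 is served by the nginx running directly on the machine.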
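The exact `ab` invocation is not shown in the listings, so the following is only a sketch of what the two runs might look like. The `-n` and `-c` values come from the test description, the host and path come from the reports (`localhost`, `/index.html`), and `-k` is an assumption based on the reports listing Keep-Alive requests. The helper name `build_ab_command` is hypothetical.

```python
import shlex


def build_ab_command(port, requests=50000, concurrency=1000,
                     path="/index.html", keep_alive=True):
    """Build an ApacheBench argument list for one test run."""
    cmd = ["ab", "-n", str(requests), "-c", str(concurrency)]
    if keep_alive:
        # Assumption: keep-alive was enabled, since the reports
        # contain a "Keep-Alive requests" line.
        cmd.append("-k")
    cmd.append(f"http://localhost:{port}{path}")
    return cmd


# 6000: nginx in Docker (bridge mode); 7000: nginx on the host
for port in (6000, 7000):
    print(shlex.join(build_ab_command(port)))
```

The function only builds the command; passing the list to `subprocess.run` would execute it on a machine that has `ab` installed.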

    [root@localhost ~]
    This is ApacheBench, Version 2.3 <$Revision: 1430300 $>
    Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
    Licensed to The Apache Software Foundation, http://www.apache.org/

    Benchmarking localhost (be patient)
    Completed 5000 requests
    Completed 10000 requests
    Completed 15000 requests
    Completed 20000 requests
    Completed 25000 requests
    Completed 30000 requests
    Completed 35000 requests
    Completed 40000 requests
    Completed 45000 requests
    Completed 50000 requests
    Finished 50000 requests


    Server Software:        nginx/1.17.6
    Server Hostname:        localhost
    Server Port:            6000

    Document Path:          /index.html
    Document Length:        65313 bytes

    Concurrency Level:      1000
    Time taken for tests:   11.422 seconds
    Complete requests:      50000
    Failed requests:        0
    Write errors:           0
    Keep-Alive requests:    49647
    Total transferred:      3277698235 bytes
    HTML transferred:       3265650000 bytes
    Requests per second:    4377.71 [#/sec] (mean)
    Time per request:       228.430 [ms] (mean)
    Time per request:       0.228 [ms] (mean, across all concurrent requests)
    Transfer rate:          280249.94 [Kbytes/sec] received

    Connection Times (ms)
                  min  mean[+/-sd] median   max
    Connect:        0   30 269.2      0    3009
    Processing:    16  164 741.1     75    9045
    Waiting:        1  162 741.2     74    9044
    Total:         17  193 979.4     75   11207

    Percentage of the requests served within a certain time (ms)
      50%     75
      66%     80
      75%     85
      80%     91
      90%    102
      95%    109
      98%   1022
      99%   6590
     100%  11207 (longest request)

    [root@localhost ~]
    This is ApacheBench, Version 2.3 <$Revision: 1430300 $>
    Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
    Licensed to The Apache Software Foundation, http://www.apache.org/

    Benchmarking localhost (be patient)
    Completed 5000 requests
    Completed 10000 requests
    Completed 15000 requests
    Completed 20000 requests
    Completed 25000 requests
    Completed 30000 requests
    Completed 35000 requests
    Completed 40000 requests
    Completed 45000 requests
    Completed 50000 requests
    Finished 50000 requests


    Server Software:        nginx/1.16.1
    Server Hostname:        localhost
    Server Port:            7000

    Document Path:          /index.html
    Document Length:        65313 bytes

    Concurrency Level:      1000
    Time taken for tests:   4.645 seconds
    Complete requests:      50000
    Failed requests:        0
    Write errors:           0
    Keep-Alive requests:    49820
    Total transferred:      3277699100 bytes
    HTML transferred:       3265650000 bytes
    Requests per second:    10764.18 [#/sec] (mean)
    Time per request:       92.901 [ms] (mean)
    Time per request:       0.093 [ms] (mean, across all concurrent requests)
    Transfer rate:          689096.35 [Kbytes/sec] received

    Connection Times (ms)
                  min  mean[+/-sd] median   max
    Connect:        0   13 156.3      0    3010
    Processing:     4   52 196.4     35    3229
    Waiting:        0   50 196.5     34    3229
    Total:          4   65 325.7     36    4636

    Percentage of the requests served within a certain time (ms)
      50%     36
      66%     45
      75%     48
      80%     49
      90%     54
      95%     58
      98%     67
      99%   1062
     100%   4636 (longest request)
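Reading two long reports side by side is error-prone, so a small parser can pull out the headline figures. This is a minimal sketch: the function name `parse_ab_report` and the choice of fields are my own, and `sample` is an abbreviated excerpt of the port 6000 report rather than the full output.

```python
import re


def parse_ab_report(text):
    """Extract a few headline metrics from ApacheBench's text report."""
    patterns = {
        "port": r"Server Port:\s+(\d+)",
        "seconds": r"Time taken for tests:\s+([\d.]+)",
        "rps": r"Requests per second:\s+([\d.]+)",
        "p99_ms": r"^\s*99%\s+(\d+)",
    }
    result = {}
    for key, pattern in patterns.items():
        match = re.search(pattern, text, re.MULTILINE)
        if match:
            # Store everything as float to keep the result uniform.
            result[key] = float(match.group(1))
    return result


# Abbreviated excerpt of the Docker (port 6000) report
sample = """\
Server Port:            6000
Time taken for tests:   11.422 seconds
Requests per second:    4377.71 [#/sec] (mean)
  99%   6590
"""
print(parse_ab_report(sample))
```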

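Doubts

Some network performance loss in Docker's bridge mode is understandable, but a loss of more than 50% (4377.71 versus 10764.18 requests per second, roughly 59% lower) does not match what I expected.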
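To make the size of the gap explicit, the loss can be computed directly from the two "Requests per second" figures in the reports. The variable names are mine; the numbers are taken from the benchmark output.

```python
docker_rps = 4377.71   # port 6000, nginx in Docker (bridge mode)
host_rps = 10764.18    # port 7000, nginx on the host

# Relative throughput loss of the Docker setup compared with the host
loss_pct = (1 - docker_rps / host_rps) * 100
# How many times faster the host setup is
speedup = host_rps / docker_rps

print(f"throughput loss: {loss_pct:.1f}%")
print(f"host is {speedup:.2f}x faster")
```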

Let's take a look at `docker info`:

    [root@localhost ~]
    Client:
     Debug Mode: false

    Server:
     Containers: 4
      Running: 4
      Paused: 0
      Stopped: 0
     Images: 12
     Server Version: 19.03.5
     Storage Driver: overlay2
      Backing Filesystem: xfs
      Supports d_type: true
      Native Overlay Diff: true
     Logging Driver: json-file
     Cgroup Driver: cgroupfs
     Plugins:
      Volume: local
      Network: bridge host ipvlan macvlan null overlay
      Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Looks ideal: the backing filesystem is xfs, d_type is true, and the storage driver is overlay2. (Kudos to AWS: its CentOS 7 image already supports Docker this well by default.)
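The same three checks can be scripted instead of read off by eye. This is a rough sketch that works on the plain-text output of `docker info`; the function names `parse_docker_info` and `overlay2_ready` are hypothetical, and `sample` is a trimmed excerpt of the output above.

```python
def parse_docker_info(text):
    """Turn `docker info` text output into a flat {key: value} dict."""
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        # Skip section headers such as "Server:" that carry no value.
        if sep and value.strip():
            info[key.strip()] = value.strip()
    return info


def overlay2_ready(info):
    """True when storage driver, filesystem and d_type match the values noted here."""
    return (
        info.get("Storage Driver") == "overlay2"
        and info.get("Backing Filesystem") == "xfs"
        and info.get("Supports d_type") == "true"
    )


# Trimmed excerpt of the `docker info` output
sample = """\
Server Version: 19.03.5
Storage Driver: overlay2
 Backing Filesystem: xfs
 Supports d_type: true
"""
print(overlay2_ready(parse_docker_info(sample)))
```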

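Still, this is CentOS 7, and its kernel version is on the old side. Could that really be the cause of the performance gap?

Of course, it is also possible that I have misconfigured something. I'll keep investigating…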

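Conclusion

For performance, I ultimately deployed nginx directly on the machine as the outermost entry point. At the same time, some services get their own docker-nginx deployment, which makes updates more flexible.

For most scenarios, nginx's excellent performance, even at a discount, is more than enough to meet business requirements.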

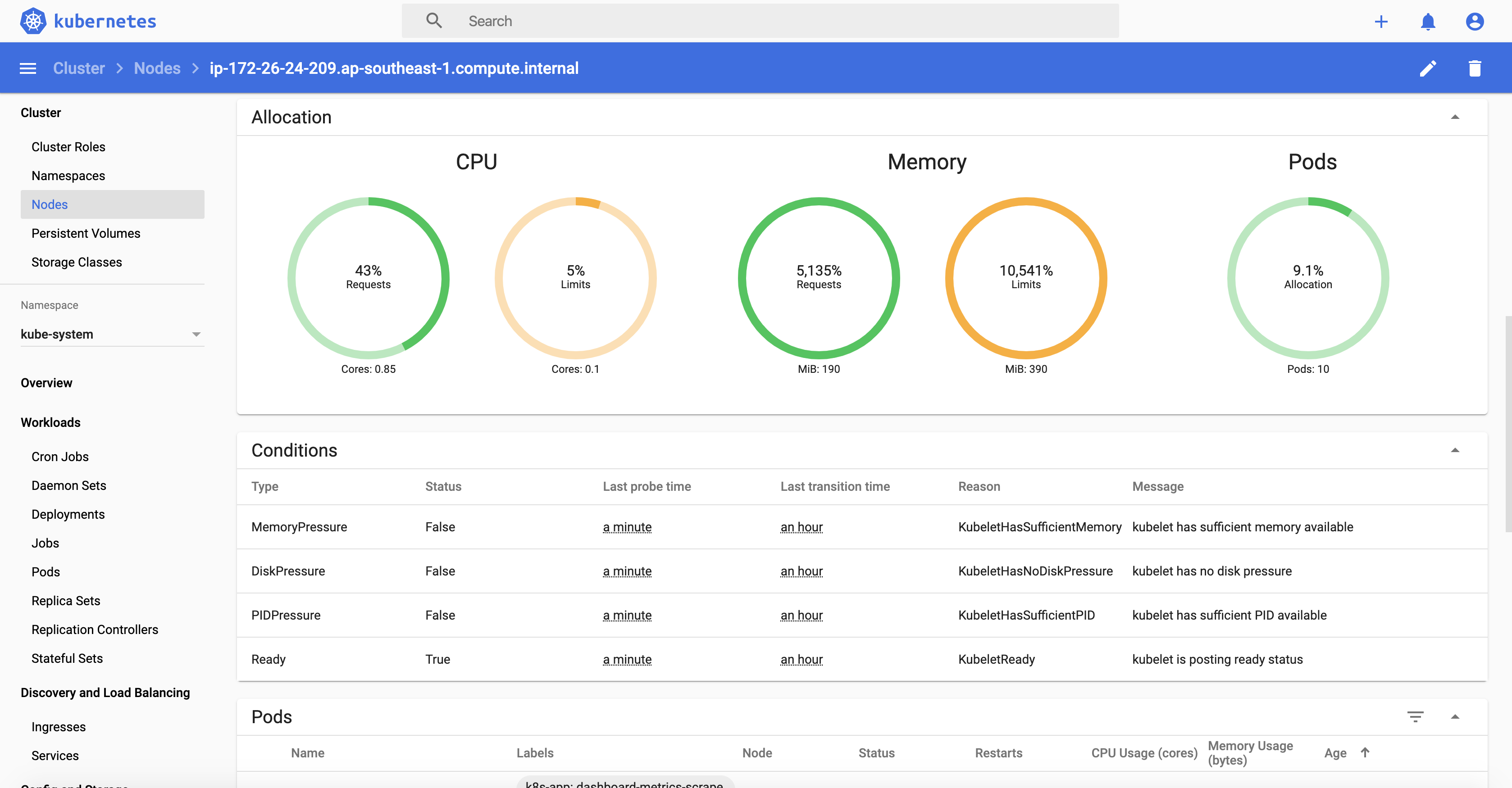
