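Preliminary note: some of the configuration in this article depends on or builds on configuration from my earlier articles, which I won't explain again in detail here. If anything is unclear, see the following articles:

Using Amazon EFS to provide externally accessible data volumes

Deploying NFS-Provisioner on Kubernetes to provide dynamic NFS volume provisioning

Installing and configuring Gitea + Drone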

Configuring Gitea

1. First, create gitea-deployment.yaml as follows:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: gitea
  namespace: YOUR_NAMESPACE
spec:
  replicas: 1
  selector:
    matchLabels:
      app: gitea
  template:
    metadata:
      labels:
        app: gitea
    spec:
      containers:
        - name: gitea
          image: gitea/gitea:latest-rootless
          ports:
            - containerPort: 3000
              name: http
          volumeMounts:
            - name: static-efs-pvc
              mountPath: "/var/lib/gitea"
              subPath: "gitea"
            - name: static-efs-pvc
              mountPath: "/etc/gitea"
              subPath: "gitea/configdir"
      restartPolicy: "Always"
      volumes:
        - name: static-efs-pvc
          persistentVolumeClaim:
            claimName: static-efs-pvc
```

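Notes:

- The image is the rootless variant. The regular (non-rootless) image works too, but it needs more configuration; the rootless one is simpler.

- Data is mounted in two places: /var/lib/gitea, where Gitea's data (including its database) lives, and /etc/gitea, which mainly holds the /etc/gitea/app.ini configuration file.

- Gitea stores source code, so its data must not be lost. I therefore keep it on Amazon EFS, mounted over NFS, with the reclaim policy set to Retain.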
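For reference, here is a minimal sketch of a statically provisioned PV/PVC pair that could sit behind `static-efs-pvc`, assuming the EFS file system is mounted directly over NFS. The server hostname `fs-XXXXXXXX.efs.YOUR_REGION.amazonaws.com` and the `100Gi` capacity are placeholders (EFS is elastic, so the size is nominal); the actual setup is covered in the earlier EFS article.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: static-efs-pv
spec:
  capacity:
    storage: 100Gi                          # nominal; EFS does not enforce a size
  accessModes:
    - ReadWriteMany                         # Gitea, Drone and the registry share one volume
  persistentVolumeReclaimPolicy: Retain     # keep the data even if the PVC is deleted
  storageClassName: ""                      # static binding, no dynamic provisioner
  mountOptions:
    - nfsvers=4.1
  nfs:
    server: fs-XXXXXXXX.efs.YOUR_REGION.amazonaws.com   # placeholder EFS DNS name
    path: /
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: static-efs-pvc
  namespace: YOUR_NAMESPACE
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""
  volumeName: static-efs-pv                 # bind explicitly to the PV above
  resources:
    requests:
      storage: 100Gi
```

With `Retain`, deleting the PVC leaves the PV (and the files on EFS) in place, so the data survives even if the whole namespace is torn down.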

2. Configure the Service and Ingress

```yaml
apiVersion: v1
kind: Service
metadata:
  name: gitea
  namespace: YOUR_NAMESPACE
  labels:
    app: gitea
spec:
  selector:
    app: gitea
  ports:
    - port: 3000
      targetPort: 3000
      protocol: TCP
      name: http
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: gitea-ingress
  namespace: YOUR_NAMESPACE
  annotations:
    nginx.ingress.kubernetes.io/proxy-body-size: "100m"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "600"
spec:
  rules:
    - host: YOUR_DOMAIN
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: gitea
                port:
                  number: 3000
```

3. Deploy, then back up and restore the data

```shell
kubectl apply -f gitea-deployment.yaml
kubectl apply -f gitea-svc.yaml
kubectl apply -f gitea-ingress.yaml
```

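Open your domain and you will land on the first-run installation page. The Git settings can stay at their defaults: the rootless image uses Gitea's built-in SSH server, which listens on port 2222. Fill in the rest as needed. When the installation finishes, open the site again and you will see the main dashboard.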

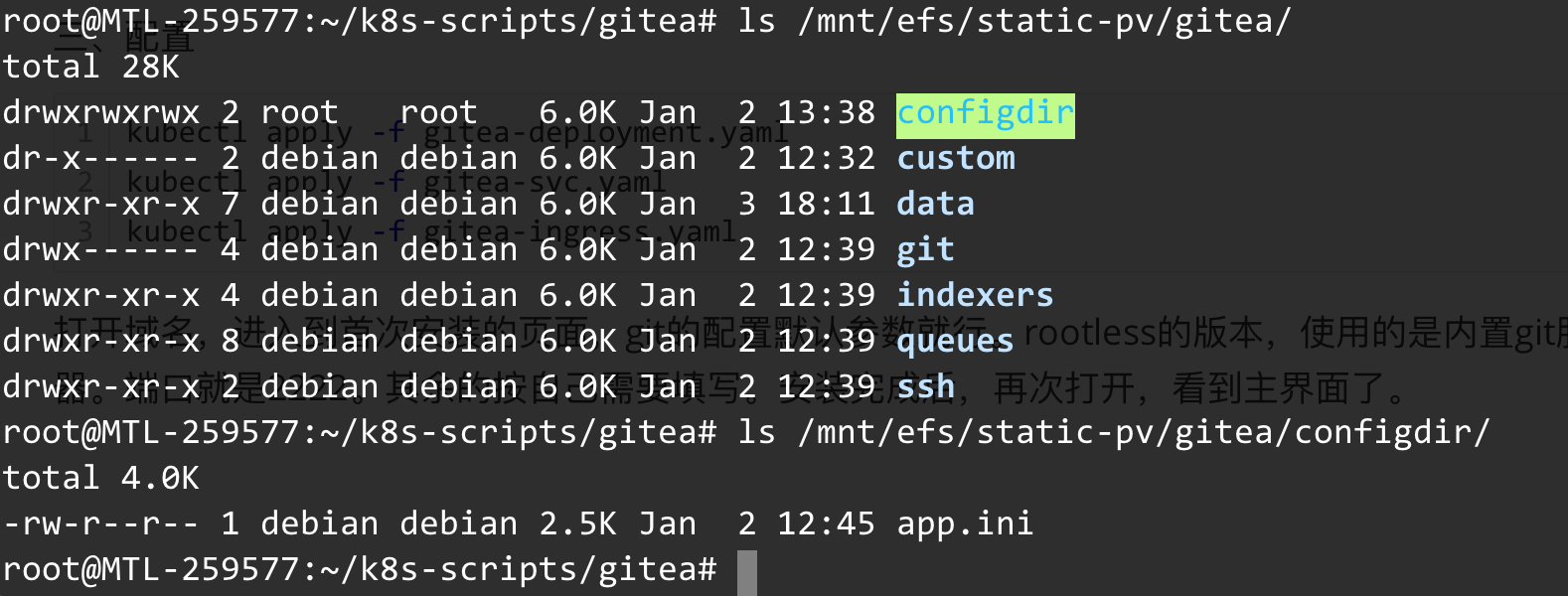

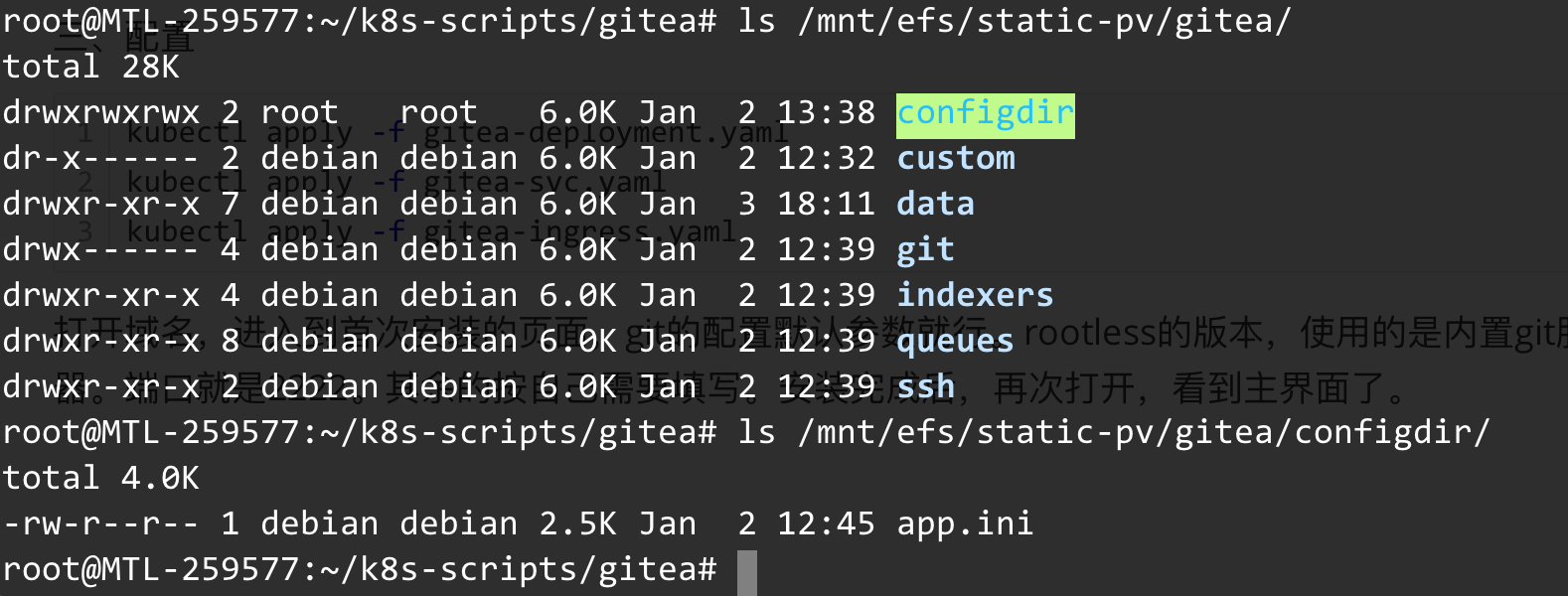
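The Deployment above only declares the HTTP port, and an Ingress can only route HTTP(S), so cloning over the built-in SSH server on port 2222 needs a separate Service. A minimal sketch, assuming a NodePort Service; the name `gitea-ssh` and node port `30022` are hypothetical. You would probably also want `SSH_PORT` under `[server]` in app.ini to match the externally reachable port, so that the clone URLs Gitea displays are correct.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: gitea-ssh                 # hypothetical name
  namespace: YOUR_NAMESPACE
spec:
  type: NodePort
  selector:
    app: gitea                    # same Pod label as the HTTP Service
  ports:
    - name: ssh
      protocol: TCP
      port: 2222
      targetPort: 2222            # built-in SSH server of the rootless image
      nodePort: 30022             # hypothetical; must be in the NodePort range (default 30000-32767)
```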

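Now take a look at the files on disk:

- The configdir directory contains the app.ini configuration file. You can edit it later and then recreate the Pod for the changes to take effect.
- The data directory holds Gitea's database.
- The git directory holds the repository data.

Data backup and restore

Simply copy the whole data directory. Note that you must use the -p flag so that the file permissions are copied as well; otherwise, after the Pod is recreated, the permissions will be wrong and Gitea won't be able to load the data.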

```shell
cp -r -p static-pv/gitea backup/
```

To restore the data, or to apply changed settings, just recreate the Pod, for example:

```shell
kubectl delete -f gitea-deployment.yaml
kubectl apply -f gitea-deployment.yaml
```
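If you want the `cp -r -p` backup described above to run on a schedule, one option is a CronJob that mounts the same `static-efs-pvc` and performs the same copy. This is a sketch under several assumptions: Kubernetes 1.21+ (`batch/v1` CronJob), a `busybox` image, a hypothetical name and schedule, and a `backup` directory on the same volume. Gitea keeps running during the copy, so the database may not be captured in a perfectly consistent state; stopping Gitea first is the safer choice for a clean backup.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: gitea-backup                  # hypothetical name
  namespace: YOUR_NAMESPACE
spec:
  schedule: "0 3 * * *"               # hypothetical: every day at 03:00
  concurrencyPolicy: Forbid           # never run two copies at once
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure
          containers:
            - name: backup
              image: busybox:1.36
              command:
                - /bin/sh
                - "-c"
                # Same copy as the manual step; -p keeps modes and ownership
                - 'mkdir -p /data/backup && cp -r -p /data/gitea "/data/backup/gitea-$(date +%Y%m%d-%H%M%S)"'
              volumeMounts:
                - name: static-efs-pvc
                  mountPath: /data    # whole volume, so gitea/ appears as /data/gitea
          volumes:
            - name: static-efs-pvc
              persistentVolumeClaim:
                claimName: static-efs-pvc
```

The timestamped directory name keeps each run separate, so restoring means copying one of them back (again with `-p`) and recreating the Pod.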

Configuring Drone

1. Configure the Drone server. I keep Drone's data directory next to Gitea's; the storage layout is:

```text
static-efs-pv
├── gitea
└── drone
```

This makes it easy to back up and restore everything together; it's simply my personal preference.

Here is drone-deployment.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: drone
  namespace: YOUR_NAMESPACE
spec:
  replicas: 1
  selector:
    matchLabels:
      app: drone
  template:
    metadata:
      labels:
        app: drone
    spec:
      containers:
        - name: drone
          image: drone/drone:1.10.1
          env:
            - name: DRONE_GITEA_SERVER
              value: https://YOUR_GITEA_HTTP_DOMAIN
            # Client ID of the OAuth2 application created in Gitea
            - name: DRONE_GITEA_CLIENT_ID
              value: YOUR_GITEA_CLIENT_ID
            # Client secret of the same Gitea application (can also be loaded from a Secret)
            - name: DRONE_GITEA_CLIENT_SECRET
              value: YOUR_GITEA_CLIENT_SECRET
            # Shared secret for server/runner RPC (see my earlier Drone article)
            - name: DRONE_RPC_SECRET
              value: YOUR_RPC_SECRET
            # Domain name of the Drone server
            - name: DRONE_SERVER_HOST
              value: YOUR_DRONE_DOMAIN
            - name: DRONE_SERVER_PROTO
              value: https
            - name: DRONE_USER_CREATE
              value: username:master,admin:true
          ports:
            - containerPort: 80
              name: http
            - containerPort: 443
              name: https
          volumeMounts:
            - name: static-efs-pvc
              mountPath: "/data"
              subPath: "drone"
      restartPolicy: "Always"
      volumes:
        - name: static-efs-pvc
          persistentVolumeClaim:
            claimName: static-efs-pvc
```
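As noted for `DRONE_GITEA_CLIENT_SECRET` above, the sensitive values can be loaded from a Secret instead of being written into the Deployment. A minimal sketch, assuming a hypothetical Secret named `drone-secrets` with keys `gitea-client-secret` and `rpc-secret`. Drone's documentation suggests generating the RPC secret with `openssl rand -hex 16`.

```yaml
# A Secret holding the sensitive Drone values
apiVersion: v1
kind: Secret
metadata:
  name: drone-secrets                 # hypothetical name
  namespace: YOUR_NAMESPACE
type: Opaque
stringData:                           # plain text here; Kubernetes stores it base64-encoded
  gitea-client-secret: YOUR_GITEA_CLIENT_SECRET
  rpc-secret: YOUR_RPC_SECRET

# Fragment (commented out, not a standalone manifest): use these entries in
# the drone container's env list instead of the literal values. drone-runner
# runs in the same namespace, so it can use the same secretKeyRef for its
# DRONE_RPC_SECRET.
#
# - name: DRONE_GITEA_CLIENT_SECRET
#   valueFrom:
#     secretKeyRef:
#       name: drone-secrets
#       key: gitea-client-secret
# - name: DRONE_RPC_SECRET
#   valueFrom:
#     secretKeyRef:
#       name: drone-secrets
#       key: rpc-secret
```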

2. Create Drone's RBAC resources and the drone-runner

drone-rbac.yaml:

```yaml
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: YOUR_NAMESPACE
  name: drone
rules:
  - apiGroups:
      - ""
    resources:
      - secrets
    verbs:
      - create
      - delete
  - apiGroups:
      - ""
    resources:
      - pods
      - pods/log
    verbs:
      - get
      - create
      - delete
      - list
      - watch
      - update
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: drone
  namespace: YOUR_NAMESPACE
subjects:
  - kind: ServiceAccount
    name: default
    namespace: YOUR_NAMESPACE
roleRef:
  kind: Role
  name: drone
  apiGroup: rbac.authorization.k8s.io
```

drone-runner-deployment.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: drone-runner
  namespace: YOUR_NAMESPACE
  labels:
    app.kubernetes.io/name: drone-runner
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: drone-runner
  template:
    metadata:
      labels:
        app.kubernetes.io/name: drone-runner
    spec:
      containers:
        - name: runner
          image: drone/drone-runner-kube:latest
          ports:
            - containerPort: 3000
          env:
            # Domain name of the Drone server
            - name: DRONE_RPC_HOST
              value: YOUR_DRONE_DOMAIN
            - name: DRONE_RPC_PROTO
              value: https
            # Must match DRONE_RPC_SECRET on the Drone server
            - name: DRONE_RPC_SECRET
              value: YOUR_RPC_SECRET
            - name: DRONE_RUNNER_CAPACITY
              value: "2"
```

3. Finally, the Service and Ingress

```yaml
apiVersion: v1
kind: Service
metadata:
  name: drone
  namespace: YOUR_NAMESPACE
  labels:
    app: drone
spec:
  selector:
    app: drone
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
      name: http
    - port: 443
      targetPort: 443
      protocol: TCP
      name: https
---
# The separator above is required: without it, the Service and the Ingress
# would be parsed as a single (invalid) YAML document.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: drone-ingress
  namespace: YOUR_NAMESPACE
spec:
  rules:
    - host: YOUR_DRONE_DOMAIN
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: drone
                port:
                  number: 80
```

4. Deploy

```shell
kubectl apply -f drone-rbac.yaml
kubectl apply -f drone-deployment.yaml
kubectl apply -f drone-svc.yaml
kubectl apply -f drone-ingress.yaml
kubectl apply -f drone-runner-deployment.yaml
```

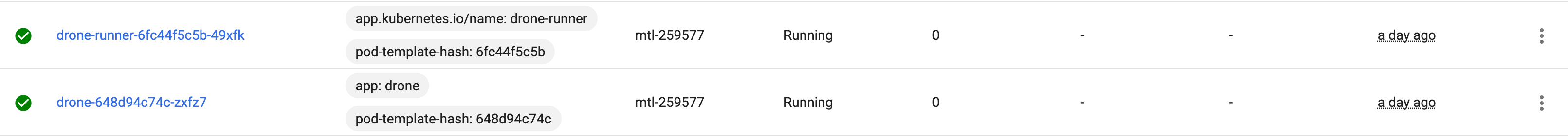

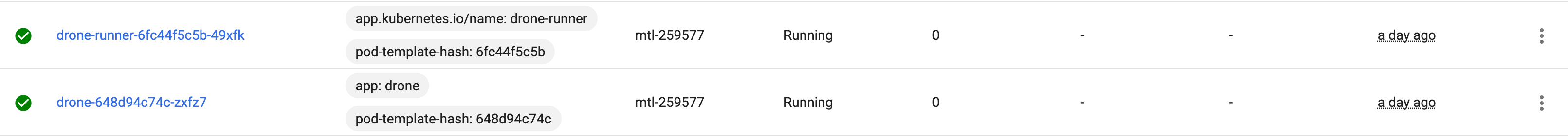

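Check that the Pods were created successfully.

Then create a simple job to see whether it runs correctly. The pipeline must use the Kubernetes type (`type: kubernetes`).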

```yaml
---
kind: pipeline
type: kubernetes
name: test-pipeline

metadata:
  namespace: YOUR_NAMESPACE

service_account_name: default

workspace:
  path: /homespace/src

volumes:
  - name: outdir
    temp: {}

steps:
  - name: Step-1
    image: appleboy/drone-ssh
    settings:
      host: myhost
      ssh_username: root
      ssh_key:
        from_secret: secret
      script:
        - echo 'hello'
```

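Once the pipeline is triggered, you will see Drone automatically create a Pod in the cluster to handle the job, and remove it when the job finishes. In my experience, though, the kube-runner communicates noticeably more slowly than the docker-runner. That is acceptable: what matters in automation is that the job itself and the whole chain run smoothly, and the more stable and secure environment the kube-runner provides is more important.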

Configuring the Registry

1. Configure a private Docker Registry with htpasswd authentication

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: docker-registry
  namespace: YOUR_NAMESPACE
spec:
  replicas: 1
  selector:
    matchLabels:
      app: docker-registry
  template:
    metadata:
      labels:
        app: docker-registry
    spec:
      containers:
        - name: docker-registry
          image: registry:2.7.1
          env:
            - name: REGISTRY_HTTP_ADDR
              value: ":5000"
            - name: REGISTRY_STORAGE_FILESYSTEM_ROOTDIRECTORY
              value: "/var/lib/registry"
            - name: REGISTRY_STORAGE_DELETE_ENABLED
              value: "true"
            - name: REGISTRY_AUTH
              value: "htpasswd"
            - name: REGISTRY_AUTH_HTPASSWD_REALM
              value: "Registry Realm"
            - name: REGISTRY_AUTH_HTPASSWD_PATH
              value: "/auth/htpasswd"
          ports:
            - containerPort: 5000
              name: http
          volumeMounts:
            - name: static-efs-pvc
              mountPath: "/var/lib/registry"
              subPath: "docker-registry"
            - name: secret-htpasswd
              mountPath: "/auth"
      restartPolicy: "Always"
      volumes:
        - name: static-efs-pvc
          persistentVolumeClaim:
            claimName: static-efs-pvc
        - name: secret-htpasswd
          secret:
            secretName: docker-registry-htpasswd
            defaultMode: 0400
```

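Notes:

- There are two mounts: /var/lib/registry, where the image data is stored, and /auth (the path is up to you), which holds the htpasswd file.

- I create the htpasswd file on my own machine, store it in a Secret, and mount the Secret into the container as a file.

- When generating htpasswd, pay attention to the hashing scheme: the registry only accepts bcrypt hashes, and any other format is rejected with a password error.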

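```shell
# On CentOS: install httpd-tools, which provides htpasswd
yum install -y httpd-tools

# -B: bcrypt (the only format the registry accepts)
# -b: take the password from the command line; -c: create the file
htpasswd -Bbc htpasswd YOUR_USERNAME YOUR_PASSWORD

# Then store the file in a Secret
kubectl --namespace YOUR_NAMESPACE create secret generic docker-registry-htpasswd --from-file=htpasswd
```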

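Note: on Debian, be careful with the hashing scheme as well. I tried the htpasswd from apache2-utils and the hash format it produced was different, so it didn't work. Whichever distribution you use, make sure to pass -B so that the hash is bcrypt.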
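If you would rather keep this Secret as a manifest than run `kubectl create secret`, an equivalent sketch looks like the following. The hash is a placeholder: paste the line printed by `htpasswd -nbB YOUR_USERNAME YOUR_PASSWORD` (`-n` prints to stdout instead of writing a file). Keep such a file out of version control, since it contains credentials.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: docker-registry-htpasswd     # the name referenced by the registry Deployment
  namespace: YOUR_NAMESPACE
type: Opaque
stringData:
  # The key name becomes the file name, i.e. /auth/htpasswd in the container.
  # Placeholder: replace with your own bcrypt line (user:$2y$...).
  htpasswd: |
    YOUR_USERNAME:YOUR_BCRYPT_HASH
```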

2. Configure the Service and Ingress

```yaml
apiVersion: v1
kind: Service
metadata:
  name: docker-registry
  namespace: YOUR_NAMESPACE
  labels:
    app: docker-registry
spec:
  selector:
    app: docker-registry
  ports:
    - name: http
      port: 5000
      targetPort: 5000
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: docker-registry-ingress
  namespace: YOUR_NAMESPACE
  annotations:
    nginx.ingress.kubernetes.io/proxy-body-size: "500m"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "600"
spec:
  tls:
    - hosts:
        - YOUR_REGISTRY_DOMAIN
      secretName: secret-host-tls
  rules:
    - host: YOUR_REGISTRY_DOMAIN
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: docker-registry
                port:
                  number: 5000
```

I expose the Ingress controller through a NodePort, with the Ingress's port 443 mapped to port 30443 on the host, so the outer proxy must forward requests over HTTPS. (My chain is Cloudflare CDN -> Nginx -> Ingress.)

```nginx
server {
    listen 443 ssl http2;
    server_name YOUR_REGISTRY_DOMAIN;

    add_header Strict-Transport-Security "max-age=63072000; includeSubdomains; preload";

    ssl_certificate     YOUR_DOMAIN_CERT.pem;
    ssl_certificate_key YOUR_DOMAIN_CERT.key;
    ssl_prefer_server_ciphers on;
    ssl_protocols TLSv1.2 TLSv1.3;

    # nginx's default client_max_body_size is 1m, which rejects most image
    # layer uploads with 413; 0 disables the check (the Ingress still caps at 500m)
    client_max_body_size 0;

    location / {
        proxy_redirect off;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        # HTTPS port of the Ingress controller, exposed as NodePort 30443
        proxy_pass https://127.0.0.1:30443;
    }
}
```
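For context, the NodePort mapping mentioned above (Ingress port 443 to host port 30443) could be expressed as the following Service. This sketch assumes ingress-nginx was installed from its official manifests, whose controller Pods live in the `ingress-nginx` namespace, carry the labels below, and expose container ports named `http` and `https`; the HTTP node port `30080` is hypothetical, and only `30443` comes from this article.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: NodePort
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
  ports:
    - name: http
      port: 80
      targetPort: http
      nodePort: 30080        # hypothetical
    - name: https
      port: 443
      targetPort: https
      nodePort: 30443        # the port the outer Nginx proxies to
```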

As before, apply the configuration with kubectl:

```shell
kubectl apply -f docker-registry-deployment.yaml
kubectl apply -f docker-registry-svc.yaml
kubectl apply -f docker-registry-ingress.yaml
```

Open the registry domain in a browser. If you are prompted for a username and password and they are accepted, the setup works.

Then try `docker login` and push an image.
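Once a manual push works, the same registry can be the target of a Drone pipeline. A minimal sketch using the `plugins/docker` plugin, assuming a Dockerfile at the repository root; the pipeline name, the image name `myapp`, and the Drone repository secrets `registry_username` and `registry_password` are hypothetical and would need to be created in the repository's settings. The plugin starts its own Docker daemon and therefore needs a privileged container; as far as I know Drone permits this for `plugins/docker` by default, but check the runner's privileged-image settings if the step fails.

```yaml
---
kind: pipeline
type: kubernetes
name: build-and-push                    # hypothetical pipeline name

metadata:
  namespace: YOUR_NAMESPACE

steps:
  - name: publish
    image: plugins/docker
    settings:
      registry: YOUR_REGISTRY_DOMAIN
      repo: YOUR_REGISTRY_DOMAIN/myapp  # hypothetical image name
      tags:
        - latest
      username:
        from_secret: registry_username  # hypothetical Drone secret
      password:
        from_secret: registry_password  # hypothetical Drone secret
```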

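Conclusion

I'm gradually moving all my services and data into my self-hosted Kubernetes cluster. Data is stored centrally on EFS and allocated through the cluster's PVs, which makes migrating and scaling data very convenient. And with everything running in one cluster, services can reach one another much more easily.

References: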

https://hub.docker.com/r/gitea/gitea

https://hub.docker.com/r/jkarlos/git-server-docker/

https://kubernetes.io/zh/docs/tasks/configmap-secret/managing-secret-using-kubectl/